Summary

AI reasoning models are specialized large language models (LLMs) that go beyond simple text generation to perform complex reasoning tasks by breaking down problems into steps and using logical thought processes, similar to how humans reason.

OnAir Post: AI Reasoning Models

About

Overview

- What they are: AI reasoning models are a type of LLM trained not only to generate text but also to engage in logical reasoning and problem-solving.

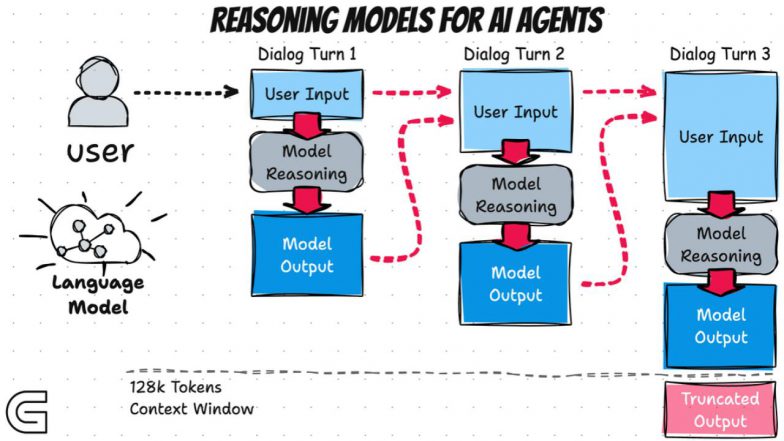

- How they work: Instead of directly generating answers, they break down complex problems into smaller, manageable steps, analyze the information, and arrive at a solution through a “chain of thought” process.

- Key Features:

  - Chain-of-Thought Prompting: They are trained to show their intermediate reasoning steps, which makes the path to an answer more transparent.

  - Self-Improvement: Some models incorporate mechanisms that allow them to learn and improve their reasoning abilities over time.

  - Test-Time Thinking: They can spend additional computation while answering, adjusting their reasoning process to the specific context of the problem.

- Examples: OpenAI’s o1 and DeepSeek-R1 are reasoning models that can handle complex tasks in mathematics, science, and coding.

- Benefits:

  - Improved Accuracy: By breaking down problems and reasoning through them, these models can achieve higher accuracy in complex tasks.

  - More Human-Like Reasoning: Their ability to show their reasoning process makes them more akin to human problem-solving.

  - Better Understanding: They can provide insights into how they arrived at a solution, which can be valuable for debugging and understanding the model’s decisions.

- Applications: Reasoning models are being used in various fields, including legal analysis, financial modeling, and scientific research.

Source: Google AI Overview
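
The "How they work" item above describes breaking a problem into smaller steps and reaching an answer through a chain of thought. The Python sketch below is one rough illustration of that idea. It does not call any real model or API: `ReasoningTrace`, `build_cot_prompt`, and `solve_total_cost` are hypothetical names made up for this example, the prompt wording is only an assumption, and the arithmetic problem stands in for a task a reasoning model might decompose.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ReasoningTrace:
    """Hypothetical container for intermediate steps and a final answer."""
    steps: List[str] = field(default_factory=list)
    answer: Optional[int] = None


def build_cot_prompt(question: str) -> str:
    """Wrap a question in a step-by-step instruction (illustrative wording only)."""
    return (
        f"Question: {question}\n"
        "Think through the problem step by step, "
        "then state the final answer on its own line."
    )


def solve_total_cost(unit_price_cents: int, quantity: int, tax_percent: int) -> ReasoningTrace:
    """Toy stand-in for a model: solve in small steps and record each one."""
    trace = ReasoningTrace()

    # Step 1: price of all items before tax, in integer cents.
    subtotal = unit_price_cents * quantity
    trace.steps.append(f"Step 1: subtotal = {unit_price_cents} * {quantity} = {subtotal}")

    # Step 2: tax on the subtotal (integer division rounds down to whole cents).
    tax = subtotal * tax_percent // 100
    trace.steps.append(f"Step 2: tax = {subtotal} * {tax_percent}% = {tax}")

    # Step 3: combine the two partial results into the final answer.
    total = subtotal + tax
    trace.steps.append(f"Step 3: total = {subtotal} + {tax} = {total}")

    trace.answer = total
    return trace


if __name__ == "__main__":
    print(build_cot_prompt("What do 4 items at $2.50 each cost with 10% tax?"))
    trace = solve_total_cost(unit_price_cents=250, quantity=4, tax_percent=10)
    for step in trace.steps:
        print(step)
    print(f"Final answer: {trace.answer} cents")
```

Keeping each step in the trace, rather than returning only the total, mirrors the "Better Understanding" benefit listed above: a reader can see which intermediate result an error came from.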